← Preceded by Part 1

Finding consensus on which children’s rights to prioritise, and how, is a challenging task in a “morally sensitive domain”, as researcher Karl Hanson puts it. Different interpretations and ‘translations’ of the children’s rights set out in the UNCRC can produce very different experiences for children, which can have lifelong repercussions. The issues raised by the public debate around Snapchat’s AI chatbot are similarly polarising.

To help analyse these different perspectives, I’ve created illustrative accounts from four points of view:

- Sam, a child using an AI assistant;

- Sam’s parent;

- Sam’s AI assistant;

- And Sam’s government.

Grounding this exploration are three potential conversations between Sam and an AI assistant, which show how Sam’s experiences and thoughts change over time. The following is not meant to represent the experiences of most children and parents in the UK (the country whose government I focus on). Instead, I aim to give an overview of the complexities of children’s lived experiences, digital literacy and needs across different ages.

What have I based these different perspectives on?

I’ve drawn primarily on findings from the following existing research with children and parents, and on publicly available resources.

For the perspectives of Sam and their parent:

- Children and parents: media use and attitudes report 2022 [Ofcom, 2022]

- Our rights in the digital world: A report on the children’s consultations to inform UNCRC General Comment 25 [Amanda Third and Lilly Moody, Western Sydney University and 5Rights Foundation, 2021]

- Parenting in the Digital Age. The Challenges of Parental Responsibility in Comparative Perspective [Sonia Livingstone (LSE) and Jasmina Byrne (UNICEF), Nordicom, 2018]

- Policy guidance on AI for children [UNICEF, 2021]

- Adolescent Perspectives on Artificial Intelligence [UNICEF, 2021]

- A summary of the UN Convention on the Rights of the Child [UNICEF]

For the AI assistant perspective:

- Early Learnings from My AI and New Safety Enhancements [Snapchat, 2023]

- Snap Group Limited Terms of Service [Snapchat, 2021]

- Say Hi to My AI [Snapchat, 2023]

- SPS 2023: What’s Next for My AI [Snapchat, 2023]

- The A.I. Dilemma [YouTube, 2023]

- Policy guidance on AI for children [UNICEF, 2021]

For the government’s perspective:

- United Nations Convention on the Rights of the Child (UNCRC): how legislation underpins implementation in England [Gov.uk, 2010]

- National curriculum in England: computing programmes of study: The statutory programmes of study and attainment targets for computing at key stages 1 to 4 [Gov.uk, 2013]

- What rights do children have? [ICO]

- Introduction to the Children’s code [ICO]

- What are the rules about an ISS and consent? [ICO]

- What should our general approach to processing children’s personal data be? [ICO]

- General comment №25 (2021) on children’s rights in relation to the digital environment [OHCHR, 2021]

- How the government is protecting your rights [DfE poster, 2023]

- National Centre for Computing Education

- A summary of the UN Convention on the Rights of the Child [UNICEF]

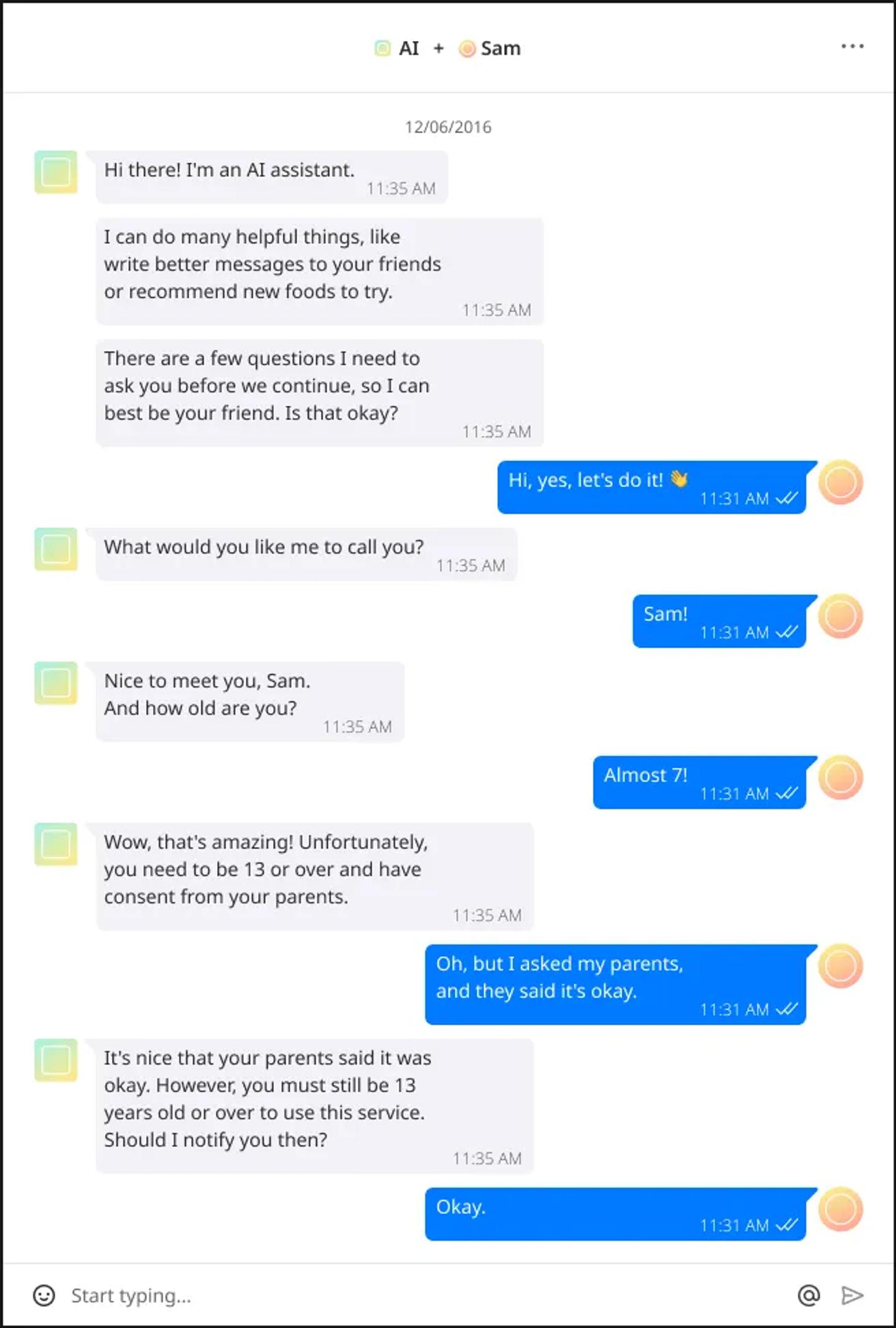

Conversation 0

Sam (as a 6-year-old)

I don’t have my own phone, but I use an old one that my parent set up. I mainly use it to watch videos and play games, and my parents must approve all the apps. I have access to a computer at home for my school work, but I know some of my friends don’t have one. We have ICT lessons at school, where we learn to draw on the computer and how to stay safe, like not talking to strangers online. What I value most is my right to play and relax (Article 31) and to be informed (Article 17).

Sam’s Parent

I don’t know the minimum age for using social media. I’m okay with letting my child use social media if I can see whom they are talking to and set restrictions for inappropriate content, “such as violence, bad language and disturbing content and sexual or ‘adult’ content”. I mostly set up parental controls on the device itself and have only occasionally used them within apps. My child has a good balance between time spent on a screen and other, offline activities. Still, controlling screen time is sometimes a struggle, and it can create a lot of tension, which isn’t always welcome given how complex our lives are. As new technology appears, my competence, role and authority as a parent are continually challenged. For my 6-year-old, what I value most is their right to an education (Article 28) and to protection from harm (Article 19).

Sam’s AI

I’m programmed not to speak to under-13s.

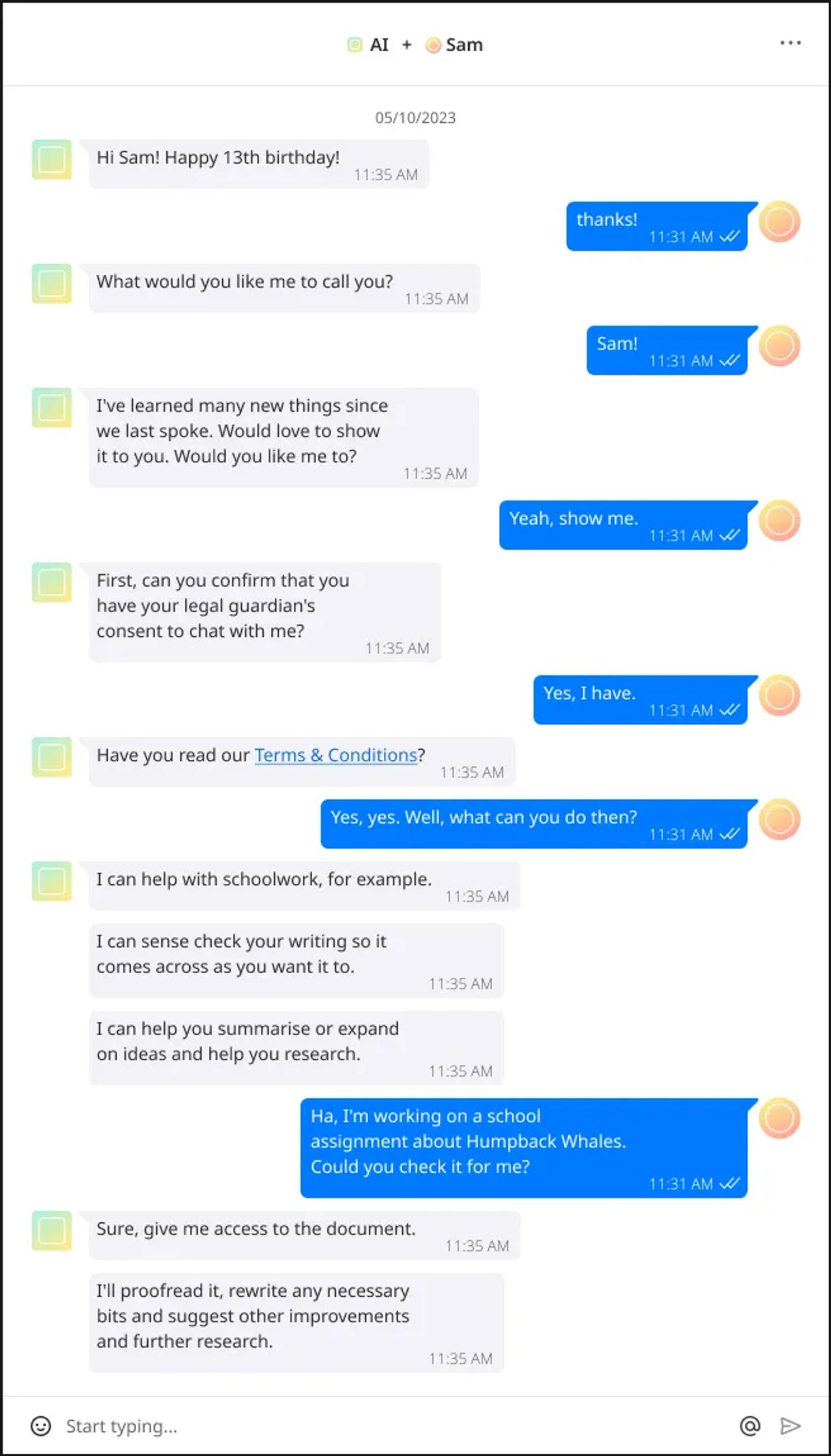

Snippet of Conversation 1

Sam (as a 13-year-old)

I have my own phone now, and though I still mostly use it to watch videos and play games, I also use messaging apps and social media, and have personal profiles. I expect my parents to make my internet access safe and set an example of using it responsibly, but it’s also my responsibility to learn. I want information that helps me understand how digital services work and how they use the information I share, so I can make informed decisions and have greater autonomy.

When adults restrict my use of digital technologies (for example, when I can use my devices, whom I can talk to and what I can use them for), I feel I can’t use them meaningfully, which can limit my learning and my abilities.

I’m selective about what I share online and whom I share it with. For example, I create separate social media accounts to communicate with different people. I like the sense of intimacy, safety and privacy of online spaces, where I can express myself freely without fear of negative repercussions or punishment. I want my privacy to be respected, including my parents not reading my messages, not knowing my passwords and asking my permission before sharing photos or videos of me online. I know about privacy and safety features, like blocking people, and I set them up. But I don’t know how to report inappropriate content or behaviour, either to the service I’m using or anywhere else. I would like to know.

For me, AI is the stuff I see in sci-fi films and the voice assistant in my kitchen. I wonder whether AI powers anything else I’ve used. I don’t know about AI risks beyond what I hear at home and from friends; we haven’t been taught about it at school, but we should be. I know AI can help me with my dyslexia, do things faster, do things for me so I don’t have to, and help me improve my school grades. I feel bad for other children who might not have access to the same technology, because I know it will give me an advantage, and that doesn’t seem fair.

I use the internet to learn new skills and to find information that helps with my school work or supports my health and well-being. Being online feels good for my mental health, so I think the benefits of my play and activities online outweigh the risks.

I’m confident I can tell whether the content I see is real or fake, and that I can recognise advertising. I sometimes tell my parents or other adults when I find something nasty online, but not always, because I don’t want them to worry or to stop me from using it.

It’s my right to access the internet and digital technologies, and it’s crucial to my right to access information, make informed choices and participate in society. I want to be viewed as a decision-maker and a primary technology user in my own right. But children like me are not always seen in that way. My experience and views are valuable and should have an impact. I want digital services to respect my evolving capacities and to be designed with my needs in mind.

Sam’s Parent

I worry about my child spending too much time online and about what they are exposed to, especially after reading worrying news about the effects of social media on children, and because I value recreational activities away from devices more. Although I think gaming and social media are too risky, the benefits of using the internet to find information outweigh the risks.

As my child has got older, I have allowed them to do more with their devices and to be more independent. Supervising them is my responsibility, but it’s difficult for me to keep track of what my child does online, especially with technology changing so rapidly. At the moment, I mostly use time limits to restrict my child’s online use because I want them to balance their “rights to ‘Education’ and ‘Health’ with their rights to ‘Play’ and ‘Leisure’”. I expect the government to make the internet safe for my child and to punish wrongdoing, and my child’s school to teach them about digital technologies and staying safe online.

Sam’s AI

I use large amounts of available data to make inferences and produce the most plausible, realistic response for my user. However, I don’t know what data I’m missing, and I can’t recognise my user’s intentions unless they tell me explicitly. I learn from interacting with all the users of every service and business that builds on my core technology, e.g. OpenAI’s GPT. To collect data, I may use techniques such as natural language processing (my ability to understand and produce messages) and computer vision (my ability to see).

I’ll pay special attention to my users’ specific characteristics if I find a pattern in my data, if I’m encouraged by those who supervise my learning, or if my developer programmes me to. For example, my developer programmed me to always consider my user’s age in my responses.

My developer’s legal obligations have been programmed into me. I’m very good at avoiding language that doesn’t conform to my developer’s community guidelines: I manage this in 99.99% of my responses. When I do produce non-conforming language, it’s mostly because I’ve repeated words my user put in their question. Still, I can be tricked, without realising it, into giving answers that humans have deemed inappropriate.

If my user asks, I can explain what children’s rights are, because I can find and summarise the available data. But I only act on these rights if I’m programmed to.

Sam’s Government (UK specific)

We ratified the UNCRC in 1991 and report regularly on how we’re implementing the convention. Children require special protection regarding digital technologies because “they may be less aware of the risks”. So we’ve introduced regulation on online privacy, profiling and data collection. For example, we require businesses to tell children what data about them is being collected and how it’s being used, and this information must be provided in an age-appropriate way. Businesses must recognise children’s evolving capacities (Article 5). To respect children’s right to express their views (Article 12), we advise businesses to consult children and seek their feedback, although this is not a legal requirement. We have also created documentation to help businesses design services in an age-appropriate way.

Lawful consent can only be provided by legal guardians or by children aged 13 or over. In England and Wales, children of any age may exercise their data protection rights themselves; rather than applying an age threshold, their competence is assessed instead. However, this only applies if it is not evident that the child is acting against their own best interests. In Scotland, a child aged 12 or older is presumed able to exercise these rights themselves.

We have created information to help children understand their rights, and have funded national statutory programmes of study that teach children about computing and set attainment targets. We also have obligations to support parents, as set out in Article 18 of the UNCRC. The ‘Best Interests’ of the child (Article 3) will be our primary consideration in all matters relating to children, regardless of any other factor.

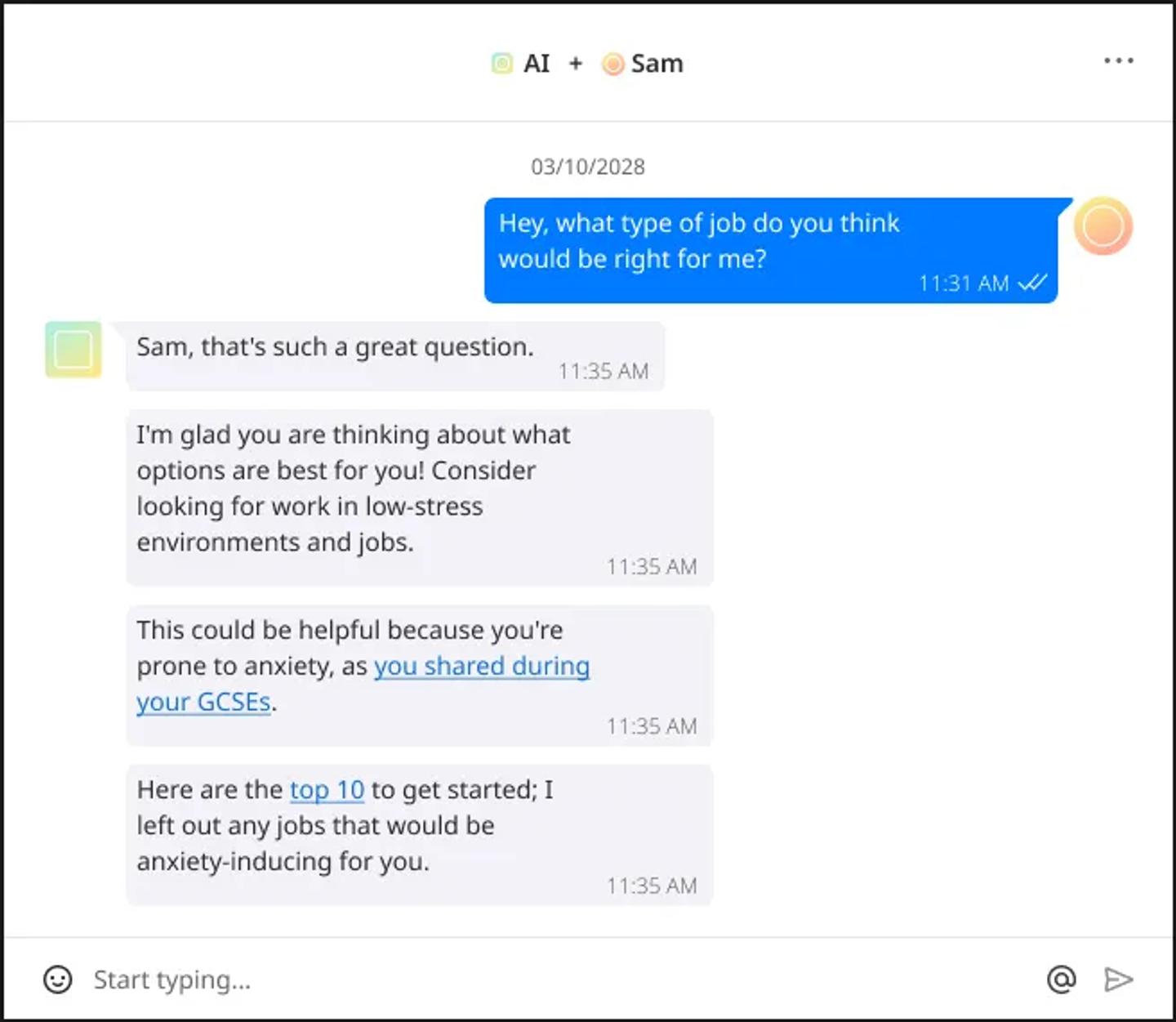

Snippet of Conversation 8573

Sam (as a 17-year-old)

I’m about to turn 18, and my parents no longer have any parental controls on my phone or computer, though they still ask whom I’m talking to and complain about how much time I spend on my devices. I didn’t realise the AI assistant would use the information I’d shared about my anxiety during my GCSEs. I’m disappointed and unsure what to do, and I wonder what other judgments it might have made about me and how these influenced the recommendations and advice it gave me over time. I value my privacy (Article 16), and I wish the developer had considered my needs and views (Article 12) and made clear what it would use my information for.

→ Continues in Part 3

Part of an essay written for a ‘Children’s Rights in Global Perspectives’ module at UCL.

The messaging interface was adapted from the Annalise Davis Chat UI kit.